UI/UX Trends That Will Shape 2026

I still remember a project from last year. The client wanted an “AI search bar” on every screen. We asked a different question: What job should the interface quietly complete for the user, without them having to ask twice? That little shift—designing for outcome, not ornament—unlocked a 30% drop in support tickets. The lesson stayed with me: 2026 UX is less about shiny widgets and more about invisible wins.

Below is my field guide to the big UI/UX shifts for 2026—written in plain English, with examples you can act on tomorrow. I’ve mixed practical experience with the best research I trust, and I’ll be transparent about sources. I’ll also show how UXGen Studio can help your team move from “we should do this” to “we shipped it.”

1. Multimodal, real-time assistants become the front door

Voice, vision, touch, and text blend into one fluid conversation. It’s no longer “click a button, fill a form.” It’s “show the app your screen, ask for help, watch it act.” In 2024–25, mainstream launches made this real: end-to-end multimodal models that process voice, image, video, and text within a single model, with human-like response times in the hundreds of milliseconds. That makes assistants genuinely useful in hands-busy and eyes-busy situations such as field work, driving, cooking, and warehouse floors.

What to do now

- Treat your assistant like a product, not a feature. Scope its top 10 jobs and make each one work end-to-end.

- Design handoffs: decide when the assistant should act on the user’s behalf and when it should ask for permission first.
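To make the act-versus-ask question concrete, a team might encode it as one small, reviewable policy. The TypeScript sketch below is purely illustrative: the `TaskRisk` fields, the `decideHandoff` function, and the 0.8 confidence threshold are hypothetical choices, not an industry standard.

```typescript
// Hypothetical handoff policy: should the assistant act, ask first, or hand
// the task back to the user? Field names and thresholds are illustrative.

type Handoff = "act" | "confirm" | "handBack";

interface TaskRisk {
  reversible: boolean;         // can the action be undone afterwards?
  spendsMoney: boolean;        // does it commit the user financially?
  sharesPersonalData: boolean; // does it send personal data to a third party?
  confidence: number;          // assistant's confidence in its plan, 0..1
}

function decideHandoff(task: TaskRisk, minConfidence = 0.8): Handoff {
  // Not sure what the user wants: don't guess, let them drive.
  if (task.confidence < minConfidence) return "handBack";
  // Irreversible, financial, or data-sharing actions always need an explicit yes.
  if (!task.reversible || task.spendsMoney || task.sharesPersonalData) {
    return "confirm";
  }
  // Reversible, low-stakes, high-confidence: act, and keep undo visible.
  return "act";
}

// Usage
const statusCheck: TaskRisk = { reversible: true, spendsMoney: false, sharesPersonalData: false, confidence: 0.95 };
const planUpgrade: TaskRisk = { ...statusCheck, spendsMoney: true };
console.log(decideHandoff(statusCheck)); // logs: act
console.log(decideHandoff(planUpgrade)); // logs: confirm
```

Keeping the rule in one function makes it easy to audit, and to loosen deliberately as trust grows.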

How UXGen Studio helps

We run “Assistant Journey Sprints”—a 2-week process to map jobs-to-be-done, capture edge cases (such as privacy, consent, and undo), and prototype multimodal flows that users can test on day 5. We anchor feasibility with your actual data and platform limits.

2. “Agentic” UX moves from demo to daily work

Assistants aren’t just answering; they’re taking actions on users’ behalf across apps: booking, filling out forms, monitoring pages, and comparing plans. In 2025, Google outlined its direction for a universal AI assistant, integrating live, agentic capabilities (screen understanding, memory, and computer control) across its products. Expect users in 2026 to ask, “Can it just do this for me?” and mean right now.

What to do now

- Design safe autonomy: clear previews (“Here’s what I’ll do”), reversible actions, activity logs, and simple stop/undo.

- Begin with bounded tasks (such as renewals and status checks) before progressing to more complex workflows.
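The preview, log, and undo pattern above can be sketched as a small runner. This is a minimal, hypothetical TypeScript example (`AgentAction`, `ActionRunner`, and the renewal usage are illustrative names, not a real agent framework), and it assumes every action the agent takes ships with its own `undo`.

```typescript
// Minimal sketch of "safe autonomy": each agent action is previewed, logged,
// and reversible. All names are hypothetical, not a specific agent framework.

interface AgentAction {
  description: string; // plain-language preview of the change
  apply: () => void;   // performs the change
  undo: () => void;    // reverts the change
}

interface LogEntry {
  at: Date;
  event: "previewed" | "applied" | "undone";
  description: string;
}

class ActionRunner {
  private readonly log: LogEntry[] = [];
  private readonly applied: AgentAction[] = [];

  // "Here's what I'll do": shown to the user before anything changes.
  preview(action: AgentAction): string {
    this.record("previewed", action.description);
    return `Here's what I'll do: ${action.description}`;
  }

  // Call only after the user (or an agreed policy) approves the preview.
  run(action: AgentAction): void {
    action.apply();
    this.applied.push(action);
    this.record("applied", action.description);
  }

  // Reverts the most recently applied action; returns false if none is left.
  undoLast(): boolean {
    const last = this.applied.pop();
    if (last === undefined) return false;
    last.undo();
    this.record("undone", last.description);
    return true;
  }

  // Read-only activity log for an "activity" panel.
  activity(): readonly LogEntry[] {
    return this.log;
  }

  private record(event: LogEntry["event"], description: string): void {
    this.log.push({ at: new Date(), event, description });
  }
}

// Usage: a bounded renewal task with preview and undo.
const subscription = { status: "expired" };
const runner = new ActionRunner();
const renew: AgentAction = {
  description: "Renew your plan for 12 months",
  apply: () => { subscription.status = "active"; },
  undo: () => { subscription.status = "expired"; },
};
console.log(runner.preview(renew));
runner.run(renew);
runner.undoLast(); // subscription.status is "expired" again
```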

How UXGen Studio helps

We define agentic guardrails: permission models, low-friction confirmations, and “trust UI” (audit trails, rationale, and confidence labels) that reduce anxiety without slowing down power users.

3. Privacy-by-design and on-device AI become table stakes

Users and regulators want intelligence without leaking sensitive data. Apple’s 2024 architecture is a positive signal: on-device models, plus a Private Cloud Compute path designed so that Apple can’t access user data used for specific AI tasks. The UX pattern matters: visible privacy choices, clear explanations of where data goes, and simple ways to say “don’t learn from this.”

What to do now

- Make data flow visible at the moment of action (not hidden in settings).

- Offer frictionless opt-outs for training and personalization.

How UXGen Studio helps

We design consent flows that are respectful and fast—tested with real users for comprehension (not just legal correctness). We also create tiny copy systems that explain AI simply, in your brand voice.

4. Compliance UX gets real: design for the AI Act era

The EU AI Act sets risk-based rules for AI systems, with progressive timelines from 2025 to 2026 and beyond, including transparency and documentation obligations. Even if you’re outside the EU, large customers will ask for proof of your processes. So UX must include interfaces for explanations, provenance, and user notices.

What to do now

- Inventory where your product uses AI (features, models, third parties).

- Add transparency UIs: model purpose, data sources, known limits, and complaint channels.
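A transparency UI is easier to keep honest when the disclosure is data rather than hand-written copy. The sketch below assumes a hypothetical `AiDisclosure` record with the four fields from the list above and flags gaps before release; it is a design aid, not a legal checklist for the AI Act.

```typescript
// Hypothetical in-product AI disclosure record, mirroring the fields listed
// above. A design aid for transparency UI, not a legal template.

interface AiDisclosure {
  feature: string;
  purpose: string;          // what the model is for, in plain language
  dataSources: string[];    // where its inputs or training data come from
  knownLimits: string[];    // documented failure modes users should know
  complaintChannel: string; // where users can contest or report an outcome
}

// Lists empty fields so a review or CI step can block an incomplete disclosure.
function missingDisclosureFields(d: AiDisclosure): string[] {
  const missing: string[] = [];
  if (d.purpose.trim() === "") missing.push("purpose");
  if (d.dataSources.length === 0) missing.push("dataSources");
  if (d.knownLimits.length === 0) missing.push("knownLimits");
  if (d.complaintChannel.trim() === "") missing.push("complaintChannel");
  return missing;
}

// Usage
const ticketSummaries: AiDisclosure = {
  feature: "Ticket summaries",
  purpose: "Summarizes long support threads for agents",
  dataSources: ["Customer support tickets"],
  knownLimits: [],
  complaintChannel: "",
};
const gaps = missingDisclosureFields(ticketSummaries); // ["knownLimits", "complaintChannel"]
```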

How UXGen Studio helps

We provide a “Responsible UI kit”—ready-to-drop components for disclosures, provenance badges, model cards, and user recourse. We adapt it to your risk class and guidance from your legal counsel.

5. Content authenticity & provenance show up in the interface

With AI-generated media everywhere, users want to know what was generated and what was edited. The C2PA/Content Credentials standard lets creators and tools attach cryptographically signed provenance to images, videos, and documents. In 2024–25, adoption accelerated across big platforms and tools, so expect 2026 users to look for that little “Content Credentials” badge. Your UI should display it and explain it.

What to do now

- Add credibility cues, such as badges, “see edit history,” and “why we trust this.”

- Train support and marketing to speak plainly about authenticity.

How UXGen Studio helps

We integrate C2PA badges into your media viewers and design simple popovers that non-experts understand in 10 seconds.

6. Personalization with user control, not guesswork

Personalization works when users steer it. E-commerce still loses about 70% of carts on average (Baymard’s benchmark), and intelligent, respectful personalization is one of the levers that can improve cart completion. But personalization turns “creepy” fast. Keep the reason for each recommendation, and the way to change it, in plain sight.

What to do now

- Add “Tune my feed” panels and “Because you…” explanations.

- Let users set soft constraints (“avoid X,” “prefer Y,” “show more beginner tips”).
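Here is one hedged way to wire soft constraints and “Because you…” explanations together. The item shape, scores, and the `boost`/`penalty` weights are assumptions for illustration; the point is that every adjustment to the ranking traces back to a preference the user set and can see.

```typescript
// Sketch of explainable, user-steerable ranking. Items, scores, and weights
// are hypothetical; "avoid" is a soft penalty, not a hard filter.

interface Item { id: string; tags: string[]; baseScore: number; }

interface Preferences {
  prefer: string[]; // "prefer Y": small boost
  avoid: string[];  // "avoid X": larger penalty
}

interface Ranked { id: string; score: number; because: string[]; }

function rank(items: Item[], prefs: Preferences, boost = 0.2, penalty = 0.5): Ranked[] {
  return items
    .map((item) => {
      let score = item.baseScore;
      const because: string[] = []; // user-facing explanations
      for (const tag of item.tags) {
        if (prefs.prefer.includes(tag)) {
          score += boost;
          because.push(`Because you prefer ${tag} content`);
        }
        if (prefs.avoid.includes(tag)) {
          score -= penalty;
          because.push(`Shown lower because you asked to avoid ${tag} content`);
        }
      }
      return { id: item.id, score, because };
    })
    .sort((a, b) => b.score - a.score); // highest score first
}

// Usage: "show more beginner tips" outranks a higher-scoring advanced item.
const feed = rank(
  [
    { id: "advanced-guide", tags: ["advanced"], baseScore: 0.9 },
    { id: "beginner-tips", tags: ["beginner"], baseScore: 0.8 },
  ],
  { prefer: ["beginner"], avoid: ["advanced"] },
);
console.log(feed.map((r) => `${r.id}: ${r.because.join("; ")}`));
```

Because “avoid” only lowers a score, users still see the content if nothing better matches, which tends to feel less like the app is hiding things.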

How UXGen Studio helps

We prototype explainable recommendations and run A/B tests that measure trust signals (opt-outs, complaint rates) alongside conversion rates.

7. Accessibility 2.0: beyond checklists to real care

WCAG 2.2, a W3C Recommendation since October 2023, adds success criteria such as Dragging Movements, Accessible Authentication, and Focus Not Obscured. But the heart of accessibility in 2026 is care: large tap targets, strong focus states, and motion and contrast toggles that are remembered. It’s also good business: more inclusive products widen your market and reduce costs.

What to do now

- Make “reduced motion,” “high contrast,” and “text size” first-class controls.

- Test with assistive tech users, not just automated scanners.
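“Remembered” settings usually come down to one rule: an explicit user choice wins, otherwise follow the operating system. A minimal sketch, assuming hypothetical `A11yPrefs` and `SystemSignals` shapes. In a browser, the system values could come from `window.matchMedia("(prefers-reduced-motion: reduce)").matches` and `window.matchMedia("(prefers-contrast: more)").matches`, with the user’s choices persisted, for example, in `localStorage`.

```typescript
// Sketch: a remembered user choice wins; "system" follows the OS/browser
// signal. Shapes and names are hypothetical.

type Choice = "on" | "off" | "system";

interface A11yPrefs {
  reducedMotion: Choice;
  highContrast: Choice;
  textScale: number; // 1 = 100%
}

interface SystemSignals {
  prefersReducedMotion: boolean; // e.g. matchMedia("(prefers-reduced-motion: reduce)")
  prefersMoreContrast: boolean;  // e.g. matchMedia("(prefers-contrast: more)")
}

interface EffectiveSettings {
  reducedMotion: boolean;
  highContrast: boolean;
  textScale: number;
}

function resolveChoice(choice: Choice, systemValue: boolean): boolean {
  return choice === "system" ? systemValue : choice === "on";
}

function effectiveSettings(prefs: A11yPrefs, sys: SystemSignals): EffectiveSettings {
  return {
    reducedMotion: resolveChoice(prefs.reducedMotion, sys.prefersReducedMotion),
    highContrast: resolveChoice(prefs.highContrast, sys.prefersMoreContrast),
    // Assumed supported range 100%..200%; WCAG's Resize Text criterion
    // asks that text scale up to 200% without loss of content.
    textScale: Math.min(2, Math.max(1, prefs.textScale)),
  };
}

// Usage: contrast explicitly off, motion left on "system".
const settings = effectiveSettings(
  { reducedMotion: "system", highContrast: "off", textScale: 1.5 },
  { prefersReducedMotion: true, prefersMoreContrast: true },
);
console.log(settings); // { reducedMotion: true, highContrast: false, textScale: 1.5 }
```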

How UXGen Studio helps

We run Accessibility Fix-A-Thons, bringing together developers, designers, QA specialists, and a screen reader expert in the same room to ship fixes live. You leave with a prioritized backlog and verified improvements.

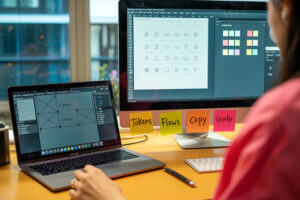

8. Design systems go token-first (and code-native)

Design systems scale when design tokens serve as the single source of truth: color, spacing, typography, motion, and semantic roles flow cleanly from Figma to code. The Design Tokens Community Group has been developing an open format for exchanging tokens between tools. Figma now exposes variables and Dev Mode, which give engineers direct access to those values. In 2026, teams that treat tokens like infrastructure will be rewarded.

What to do now

- Name tokens semantically (e.g., text/brand-strong), not by hex or size.

- Automate token sync into iOS/Android/Web and docs.
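To show what “tokens as infrastructure” means in practice, here is a small pipeline step that resolves aliases and emits CSS custom properties. It loosely follows the Design Tokens Community Group format (`$value` fields and `{group.token}` aliases) but is a simplified sketch; the token names are hypothetical, with `text/brand-strong` written as the dot path `text.brand-strong`. A production pipeline would more likely use a tool such as Style Dictionary.

```typescript
// Simplified token pipeline step, loosely following the Design Tokens
// Community Group format: tokens have a "$value"; aliases look like
// "{group.token}". Token names below are hypothetical.

interface Token { $value: string; }
type TokenTree = { [key: string]: TokenTree | Token };

function isToken(node: TokenTree | Token): node is Token {
  return typeof (node as Token).$value === "string";
}

// Flatten nested groups into dot paths, e.g. "color.blue.600" -> "#1d4ed8".
function flatten(tree: TokenTree, prefix = ""): Map<string, string> {
  const out = new Map<string, string>();
  for (const [key, node] of Object.entries(tree)) {
    const path = prefix ? `${prefix}.${key}` : key;
    if (isToken(node)) {
      out.set(path, node.$value);
    } else {
      flatten(node, path).forEach((value, p) => out.set(p, value));
    }
  }
  return out;
}

const ALIAS = /^\{([^}]+)\}$/;

// Follow alias chains to a concrete value, failing loudly on typos or cycles.
function resolveAlias(name: string, tokens: Map<string, string>, seen = new Set<string>()): string {
  const raw = tokens.get(name);
  if (raw === undefined) throw new Error(`Unknown token: ${name}`);
  const match = ALIAS.exec(raw);
  if (match === null) return raw;
  if (seen.has(name)) throw new Error(`Alias cycle at: ${name}`);
  seen.add(name);
  return resolveAlias(match[1], tokens, seen);
}

// Emit CSS custom properties; semantic names survive into code.
function toCss(tree: TokenTree): string {
  const tokens = flatten(tree);
  const lines: string[] = [];
  tokens.forEach((_raw, name) => {
    lines.push(`  --${name.replace(/\./g, "-")}: ${resolveAlias(name, tokens)};`);
  });
  return `:root {\n${lines.join("\n")}\n}`;
}

// Usage
const tokens: TokenTree = {
  color: { blue: { "600": { $value: "#1d4ed8" } } },
  text: { "brand-strong": { $value: "{color.blue.600}" } },
};
console.log(toCss(tokens));
// :root {
//   --color-blue-600: #1d4ed8;
//   --text-brand-strong: #1d4ed8;
// }
```

The same flattened, resolved map could feed iOS, Android, and documentation outputs, which is what keeps every platform on identical values.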

How UXGen Studio helps

We set up token pipelines (e.g., Style Dictionary) and a “Design-to-Code” playbook so that designers, developers, and QA teams share the same values.

9. Performance = perception (Core Web Vitals leveled up)

Google’s Interaction to Next Paint (INP) replaced FID as a Core Web Vital in March 2024. Translation: The web now measures how fast your interface feels across all interactions, not just the initial one. A snappy interface is part of brand trust—and your search visibility. In 2026, teams that budget for responsiveness (not only LCP/CLS) will win.

What to do now

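- Track INP in production with real-user monitoring (RUM), and set a budget: INP at or under 200 ms at the 75th percentile of page loads, which is Google’s “good” threshold.

- Break up long tasks, lazy-hydrate, and isolate interaction-critical code paths.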
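A sketch of the budget check itself, assuming you already collect INP samples in production (for example via the `onINP` callback in Google’s `web-vitals` library). It computes the 75th percentile with the nearest-rank method, one of several percentile definitions, and rates it against Google’s published thresholds: good at or under 200 ms, poor above 500 ms.

```typescript
// Sketch of an INP budget check over real-user samples (milliseconds).
// In the browser the samples might come from web-vitals' onINP callback;
// here they are plain numbers. Percentile method: nearest rank (an
// assumption; analytics tools may interpolate differently).

function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based nearest rank
  return sorted[Math.max(0, rank - 1)];
}

type InpRating = "good" | "needs improvement" | "poor";

// Google's published INP thresholds: <= 200 ms good, > 500 ms poor.
function rateInp(ms: number): InpRating {
  if (ms <= 200) return "good";
  if (ms <= 500) return "needs improvement";
  return "poor";
}

// Usage
const samples = [80, 120, 150, 160, 190, 210, 240, 600];
const p75 = percentile(samples, 75); // 6th of 8 sorted values
console.log(p75, rateInp(p75)); // logs: 210 needs improvement
```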

How UXGen Studio helps

Our Performance Strike Team profiles real interactions, reads flame charts, and ships surgical fixes that remove “jank” in high-impact flows such as checkout, search, and dashboard filters.

10. Spatial and 3D patterns find practical footholds

Head-worn devices and spatial UIs are maturing into practical verticals: training, field service, data visualization, and remote assistance. The bigger shift is in UX standards: spatial human interface guidelines and ergonomic patterns for comfort, readability, and motion safety, so teams don’t need to reinvent the basics.

What to do now

- Pilot small, high-value 3D moments (e.g., a part-fit check, a spatial tour).

- Follow comfort guidelines religiously: text size, contrast, motion.

How UXGen Studio helps

We storyboard spatial use cases that prove ROI in weeks, not years, and then build the right bridge to your existing web/app ecosystem.

11. Measurement gets serious: design that moves the business

It’s not news anymore: design-driven companies outperform their peers on revenue growth and shareholder returns (McKinsey’s long-running research showed top-quartile design performers growing faster than their industries). In 2026, leaders will tie design to clear metrics such as activation, time-to-task, INP, and retention, so prioritization is easier and politics are quieter.

What to do now

- Define one “golden journey” metric per team (e.g., time to first success).

- Instrument experiments; keep a decision log for what you shipped and why.
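Time to first success is simple to compute once events are instrumented. The sketch assumes a hypothetical event schema (`userId`, `name`, and `at` in epoch milliseconds) and hypothetical event names `signup` and `first_success`. It reports the median alongside how many users got there at all, since a median over successful users alone can look flattering.

```typescript
// Hypothetical event shape; your analytics schema will differ.
interface ProductEvent { userId: string; name: string; at: number; } // at: epoch ms

// Minutes from each user's first start event to their first success event.
// Users who never succeed are excluded here; report them as a completion rate.
function timeToFirstSuccess(events: ProductEvent[], start = "signup", success = "first_success"): number[] {
  const starts = new Map<string, number>();
  const wins = new Map<string, number>();
  for (const e of events) {
    const bucket = e.name === start ? starts : e.name === success ? wins : undefined;
    if (bucket === undefined) continue;
    const prev = bucket.get(e.userId);
    if (prev === undefined || e.at < prev) bucket.set(e.userId, e.at); // keep earliest
  }
  const minutes: number[] = [];
  starts.forEach((t0, user) => {
    const t1 = wins.get(user);
    if (t1 !== undefined && t1 >= t0) minutes.push((t1 - t0) / 60000);
  });
  return minutes;
}

function median(xs: number[]): number {
  if (xs.length === 0) return NaN;
  const s = [...xs].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 === 1 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

// Usage
const events: ProductEvent[] = [
  { userId: "u1", name: "signup", at: 0 },
  { userId: "u1", name: "first_success", at: 4 * 60000 },
  { userId: "u2", name: "signup", at: 0 },
  { userId: "u2", name: "first_success", at: 10 * 60000 },
  { userId: "u3", name: "signup", at: 0 }, // never reached success
];
const ttfs = timeToFirstSuccess(events);
console.log(median(ttfs), `${ttfs.length}/3 reached success`); // logs: 7 2/3 reached success
```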

How UXGen Studio helps

We create a UX Scorecard—a simple, shared dashboard that combines product analytics, UX diagnostics, and business KPIs, ensuring it remains relevant during quarterly planning.

Expert voices

- Jared Spool said, “Design is the rendering of intent.” It’s my favorite reminder to be crystal clear about the outcome that every pixel should serve.

- Google’s Chrome team: INP is now the Core Web Vital for responsiveness. If you remember one performance term in 2026, make it INP.

How UXGen Studio partners with you

- AI Assistant Sprint (2 weeks): Define jobs, trust rails, and a working prototype your execs can try.

- Responsible UI Kit: Consent, provenance, explanations—drop-in components matched to your risk profile.

- Token Pipeline Setup: Standardized design tokens, synced to code, with review workflows.

- Accessibility Fix-A-Thon: Hands-on remediation aligned with WCAG 2.2.

- Performance Strike Team: Measure and improve INP where it moves revenue.

- Spatial Pilot: Identify one realistic 3D or AR use case, storyboard it, then ship a demo users love.

We’re not fans of guesswork. We’ll bring prototypes in days, not decks in months—and we’ll test them with real users before you commit to a complete build.

A short story to end on

A few months ago, a product manager told me, “Our users never read. We need tooltips.” We sat with five customers. They didn’t want tooltips—they wanted the app to finish the step for them and show what changed. We shipped a tiny agent that auto-filled a complex form (with a clear preview and undo). Support tickets? Down. NPS? Up. Nobody missed the tooltip.

That’s 2026 UX in one scene. Less instruction. More intention.

FAQs

Q1. We’re small. Which trend should we start with?

Start where the friction hurts your users most. For many teams, that’s performance (INP) on key journeys or one agentic flow that saves people 5 minutes a day. Ship one win in 4–6 weeks, then expand.

Q2. Do we need a full “AI assistant,” or can we take baby steps?

Baby steps win. Wrap an agent around a single, tedious chore (renew a plan, file an expense, check a status) with a clear preview and undo. When trust grows, scope grows.

Q3. How do we personalize without being creepy?

Ask for preferences up front, explain “Because you…” next to recommendations, and keep a visible “Tune my feed” panel. Done well, this tends to improve conversions and reduce drop-offs.

Q4. What’s the minimum for accessibility in 2026?

Meet WCAG 2.2, test with assistive tech, and keep motion/contrast/text size controls easy to find and sticky. It’s the right thing—and it lowers risk.

Q5. Is a design system worth it for a mid-size app?

If you ship frequently or across multiple platforms, token-first systems quickly pay off. They cut UI drift, speed dev, and make dark mode/brand refreshes almost boring.

Q6. How can we discuss AI and privacy in a way that earns users’ trust?

Be specific at the moment of action: where data runs (on-device vs. cloud), what’s stored, for how long, and how to opt out. Use plain language and show an activity log.

Sources & further reading

- OpenAI on multimodal, real-time models and response-time benchmarks (OpenAI).

- Google on a universal AI assistant with live, agentic capabilities (blog.google).

- Apple on Private Cloud Compute and its privacy architecture (YouTube).

- EU AI Act summaries and official overview (C2PA; Artificial Intelligence Act).

- Content Credentials / C2PA background and adoption (Adobe Blog; Nielsen Norman Group; Fast Company).

- Baymard Institute cart abandonment benchmark, 2024 (Baymard Institute).

- WCAG 2.2 at W3C (Mailmodo).

- Design Tokens (W3C Community Group) and Figma Variables (Design Tokens Community Group; McKinsey & Company).

- INP replacing FID as a Core Web Vital, March 2024 (Google for Developers; web.dev).

- McKinsey on the business value of design (McKinsey & Company).

- Jared Spool on intent and design.
